APEX

Building and Perfecting a Machine Learning Testing Engine

Overview: Designing for Machine Learning

As Lead UX Designer for Best Buy's in-house Machine Learning (ML) testing engine, APEX, I tackled a critical challenge: ML tests frequently failed without clear indicators of data source errors, leading to significant inefficiencies and delays.

APEX users needed robust monitoring to track data accuracy and reliability throughout the ML test lifecycle. My role was to transform this complex problem into an intuitive user experience.

Key Opportunities:

- Preventing the 1 in 3 ML test failures caused by unmonitored data.

- Reducing investigation and fix time (50% of failures took 3-10 days to resolve).

- Proactive error identification to enhance product reliability.

- Simplifying complex data visualization for quick assessment.

Project Goals:

- Enable real-time ML test health monitoring.

- Provide clear alerts for critical data pipeline errors.

- Improve efficiency for developers and data scientists.

- Centralize decision health information for easy access.

Process: Leading a Google Design Sprint

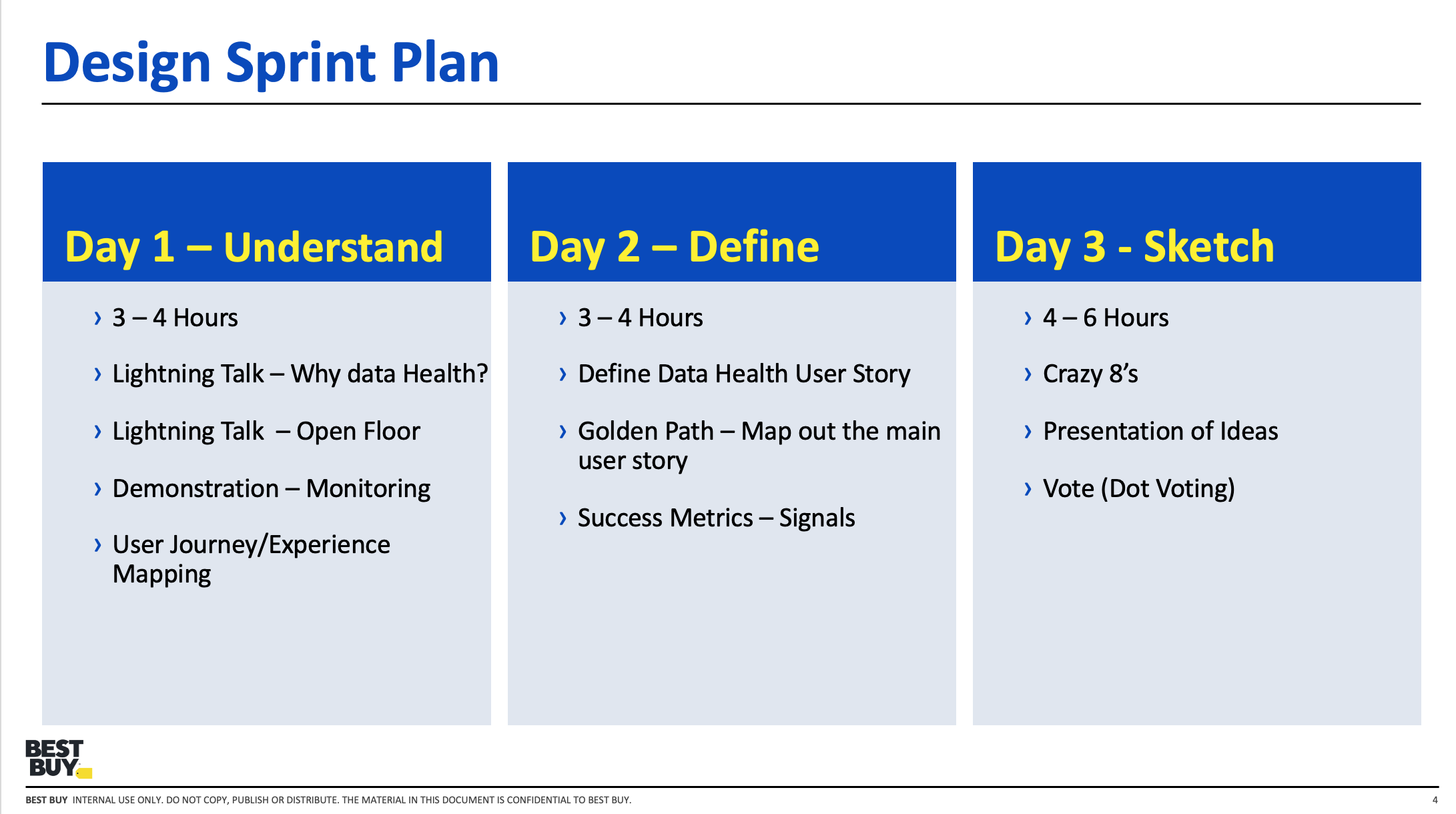

After a successful Service Blueprinting exercise, I led a 5-day Google Design Sprint to address data health monitoring. It was inspiring to guide a multidisciplinary team, including 3 Product Managers, a Director of Engineering, a Lead Data Scientist, and 8 Engineers, through the design toolkit.

My initial presentation aligned the team on goals and agenda, ensuring an energized and collaborative start to the exercise.

Day 1: Mapping the User Journey

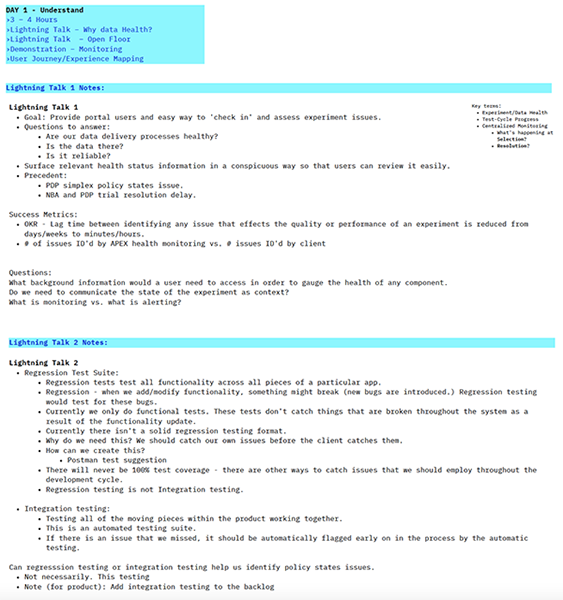

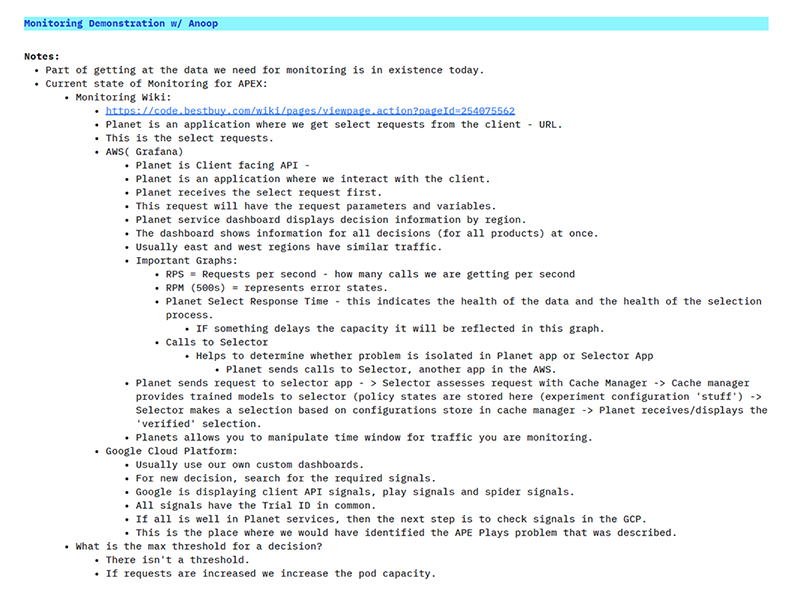

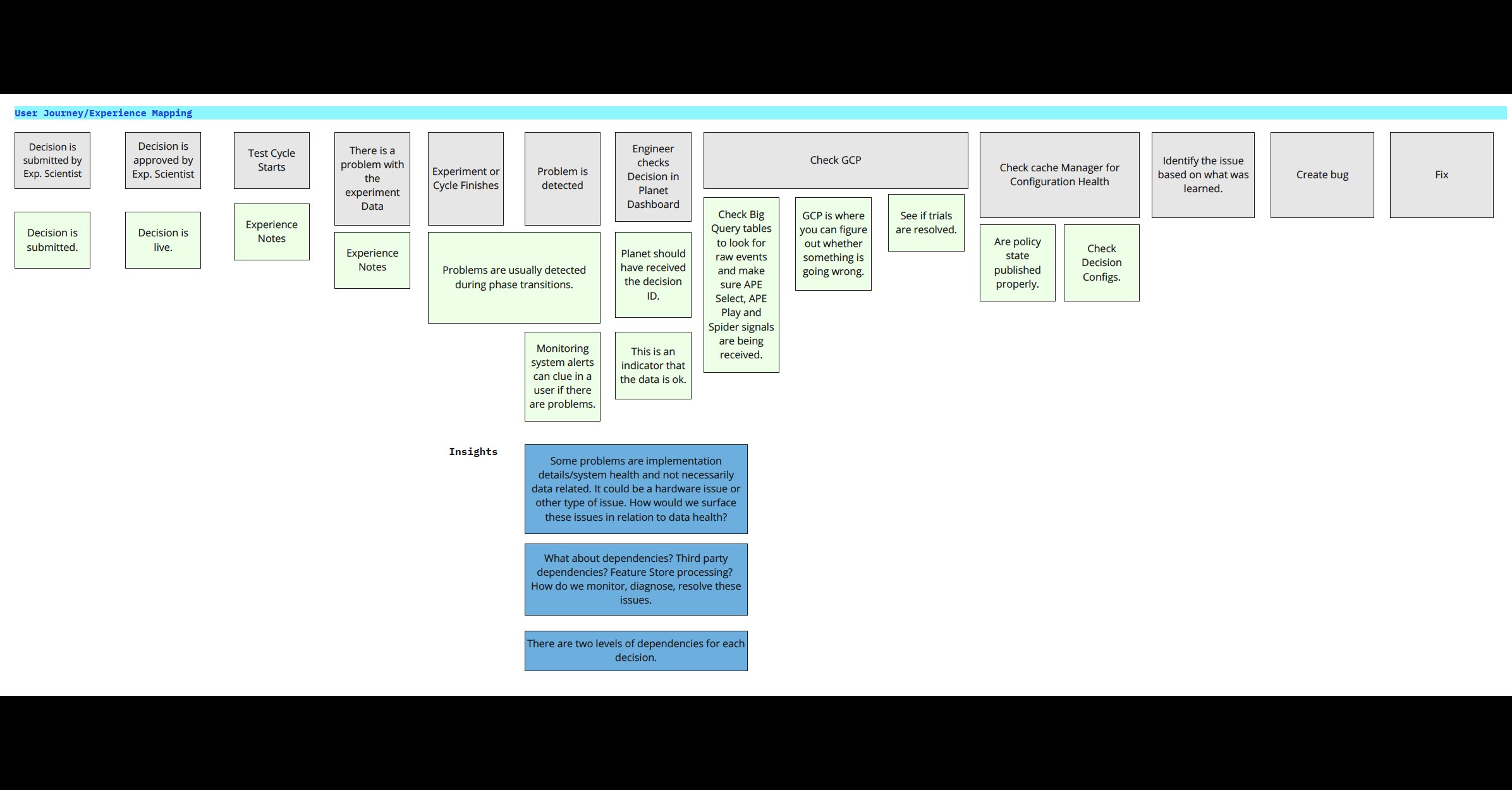

Day 1 focused on understanding. Through Lightning Talks and a Monitoring Demonstration, we leveraged diverse team experiences to map the user journey. This fostered a shared understanding of each user’s testing experience, revealing insights the team hadn't collectively acknowledged before.

I facilitated discussions, synthesizing notes live to encourage fruitful conversation. We achieved alignment on the true experience of running and monitoring a test, incorporating multiple perspectives on each phase. This day was incredibly enlightening, providing crucial context for the product's cognitive complexity.

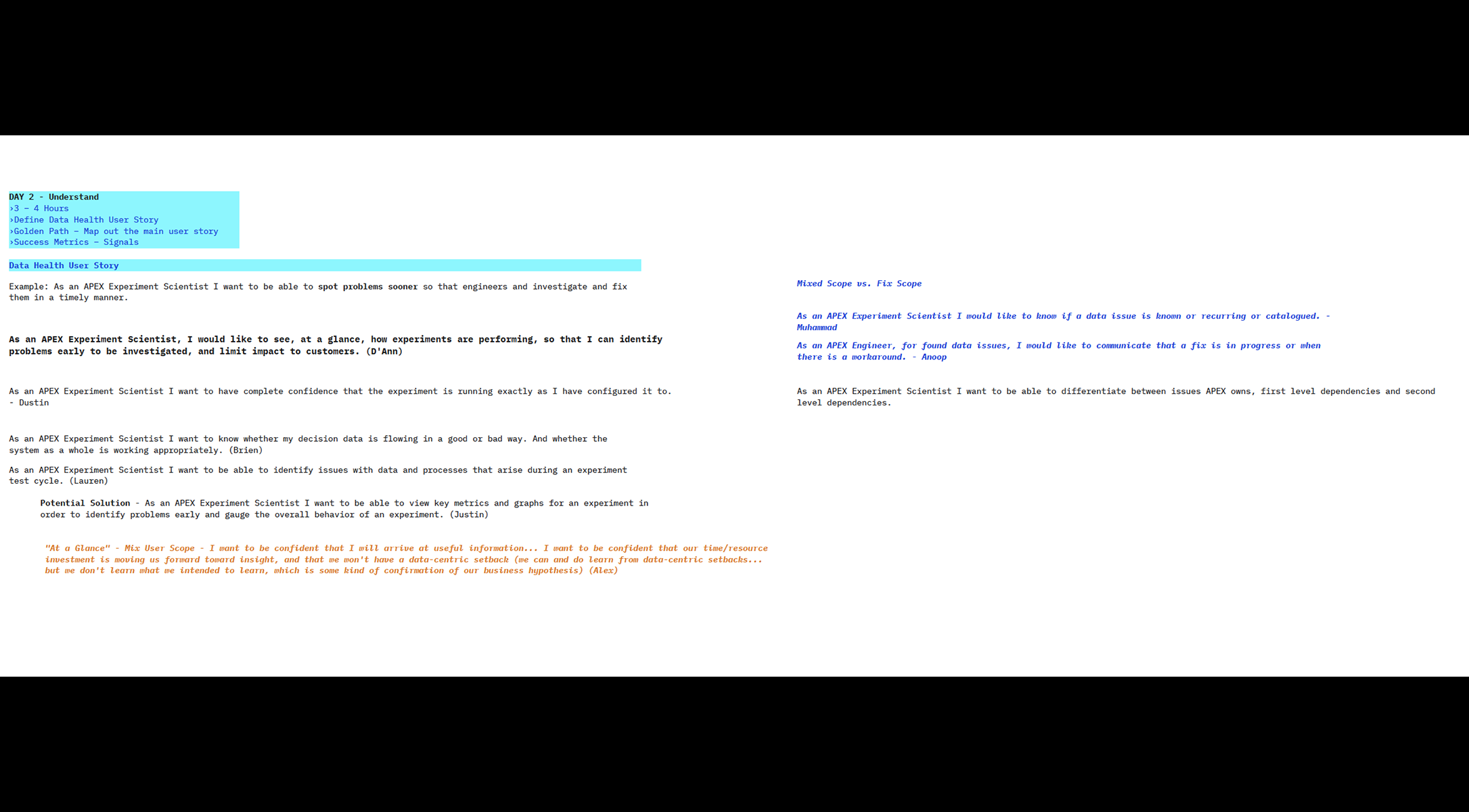

Day 2: Defining Success & User Segmentation

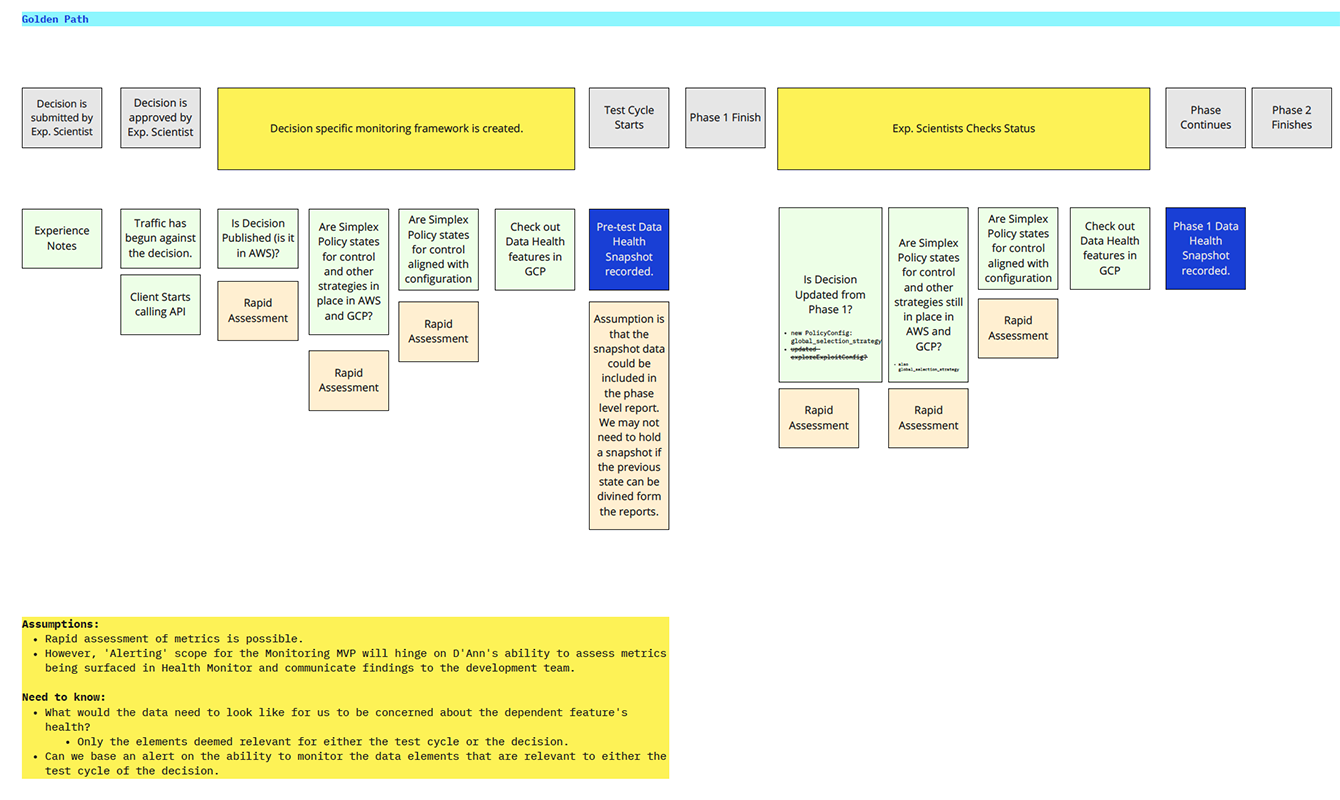

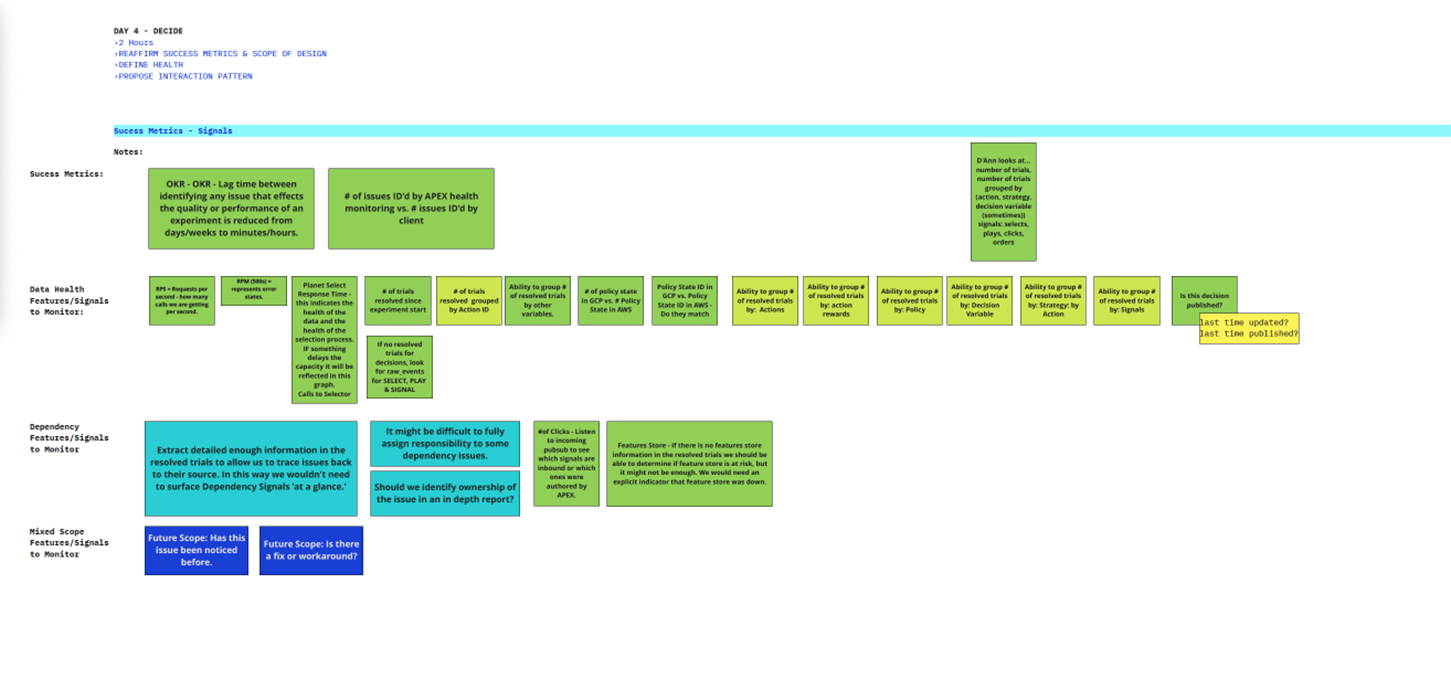

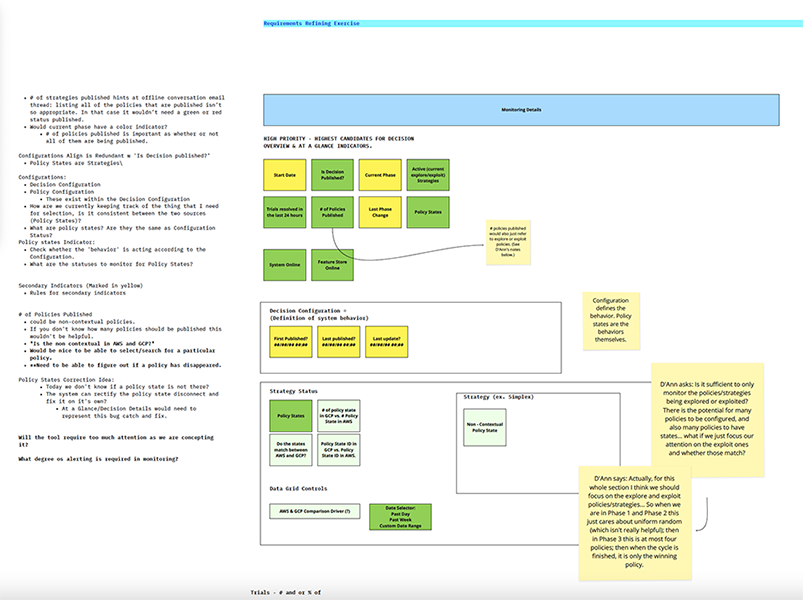

Day 2 focused on defining our path. We synthesized Day 1 learnings to craft an ideal user story, establish success hypotheses, and outline the "Golden Path."

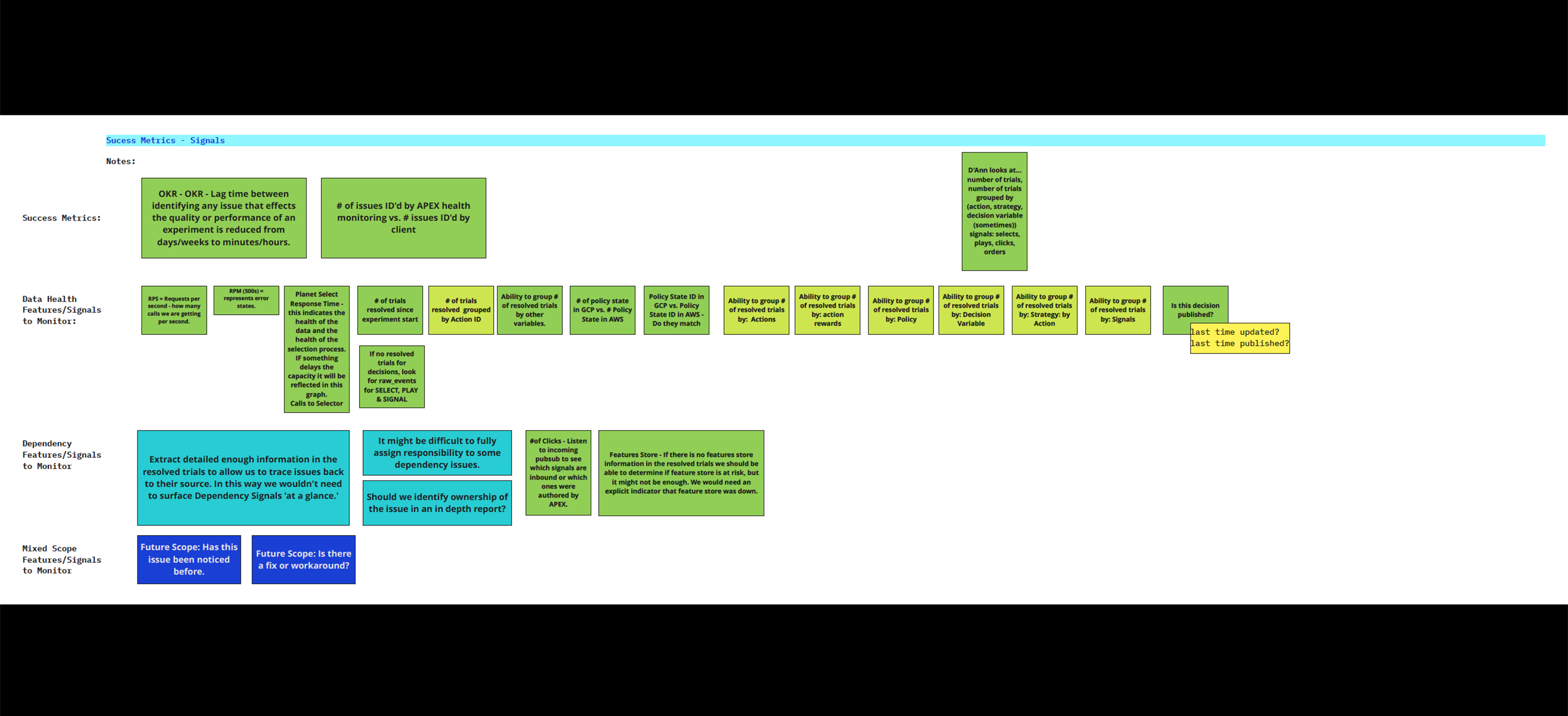

Team proposals revealed significant user segmentation: developers, data scientists, and product owners each needed different signals and analyses. Because each role defined "data health" differently, prioritizing which data to surface for immediate action was key.

I used a "Jobs to Be Done" approach to understand how users accessed metrics. By color-coding metrics based on priority and technical feasibility, we aligned on a needs hierarchy. This informed our Golden Path, reflecting current monitoring and alerting protocols.

Day 3: Sketching & Ideation

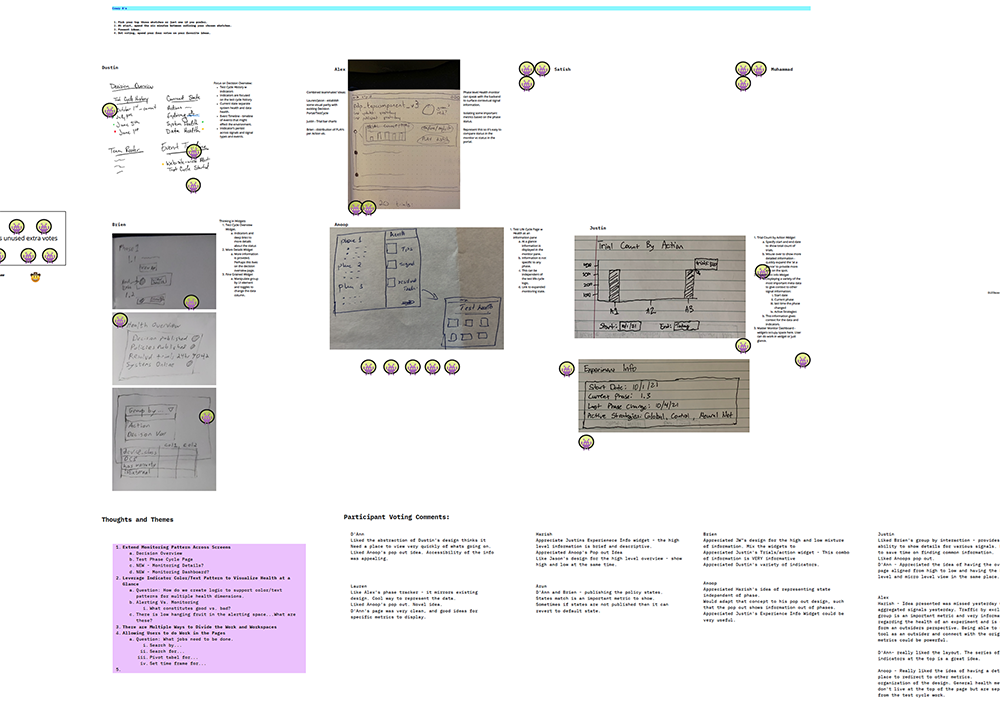

Sketch day is always a highlight, often revealing brilliant ideas from unexpected sources. A key idea emerged for a test overlay panel, or widget, a pattern I was already exploring. The Crazy 8’s exercise powerfully visualized data relationships, confirmed my interaction pattern hypothesis, and deepened our shared understanding of each user's journey.

We confirmed that developers needed high-level data health indicators (Action or Trial metrics) to identify problems quickly. Data analysts required specific Trial-to-Action information to research a solution. Future considerations included supporting the Digital Product Owner/Designer with critical, clearly surfaced information for accurate reporting.

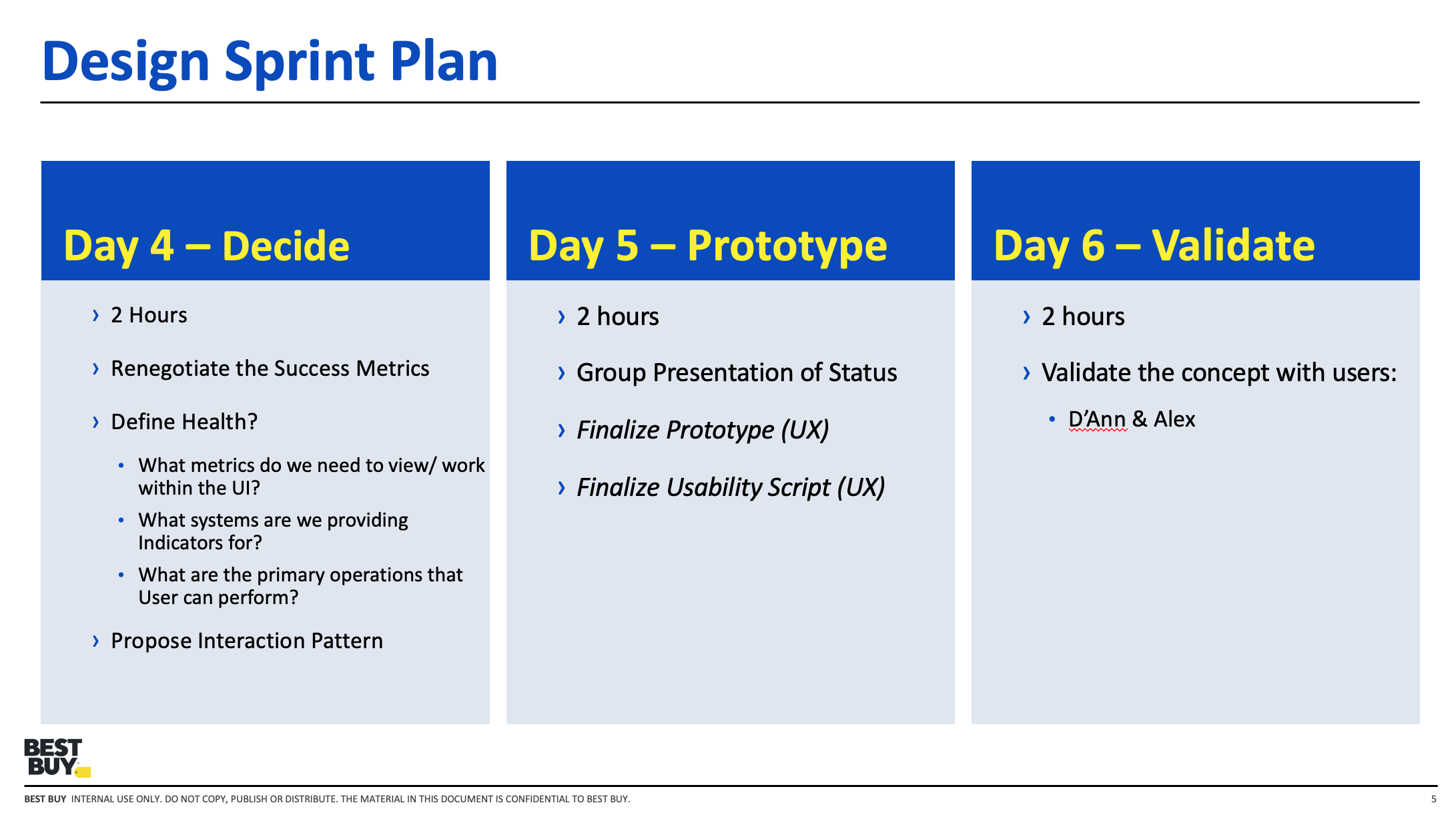

Day 4: Prototyping & Validation

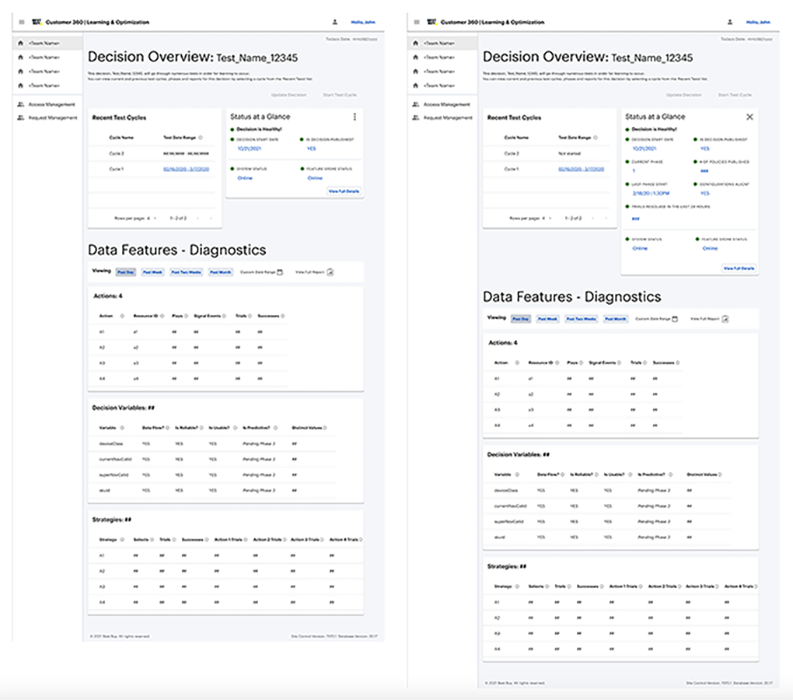

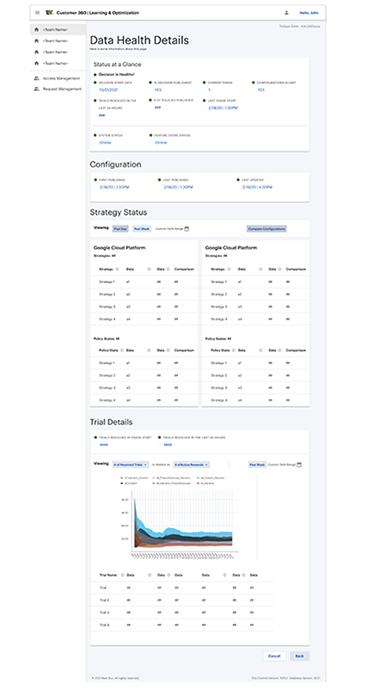

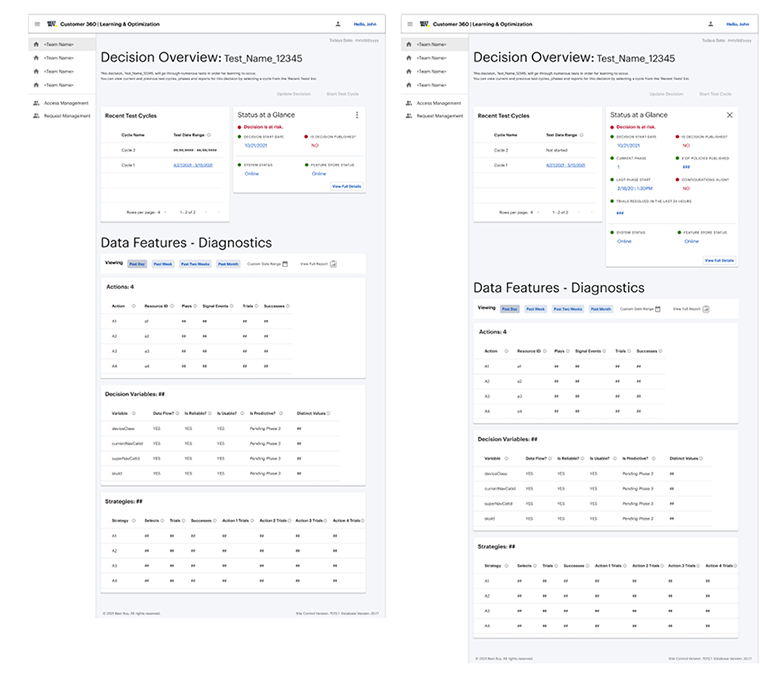

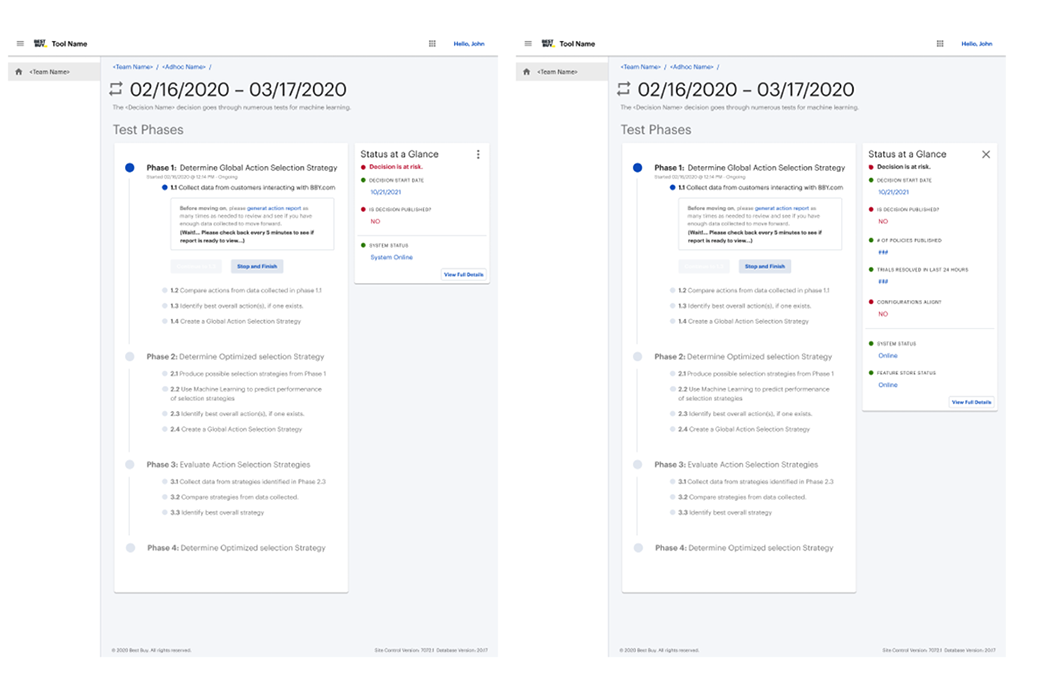

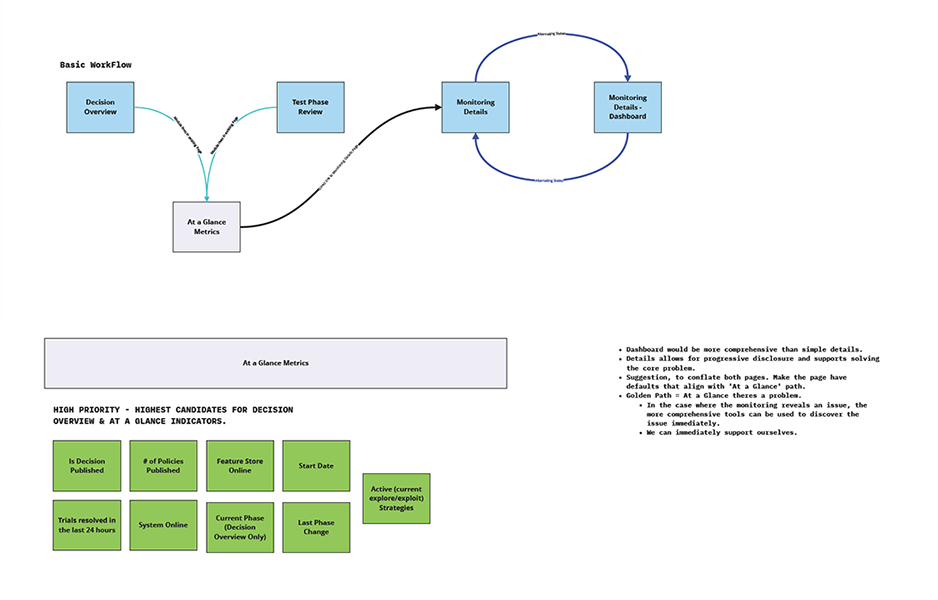

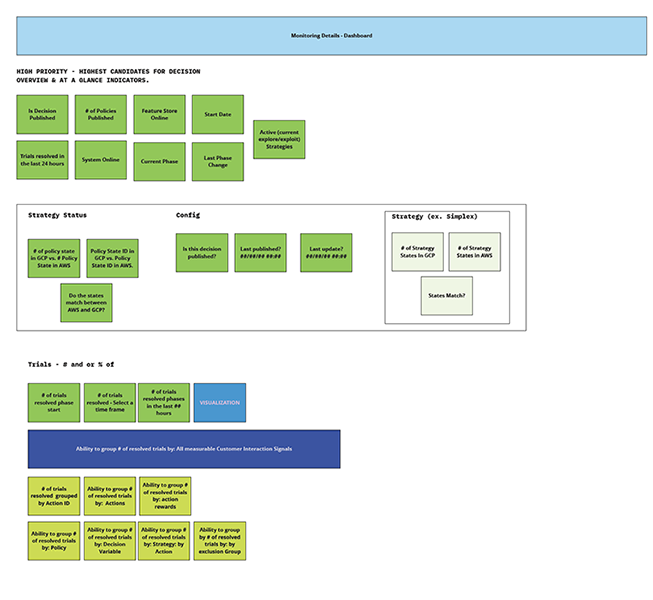

On Day 4, I synthesized insights to create wireframes and designs using our Internal Tools Pattern Library. I focused on two key interaction patterns:

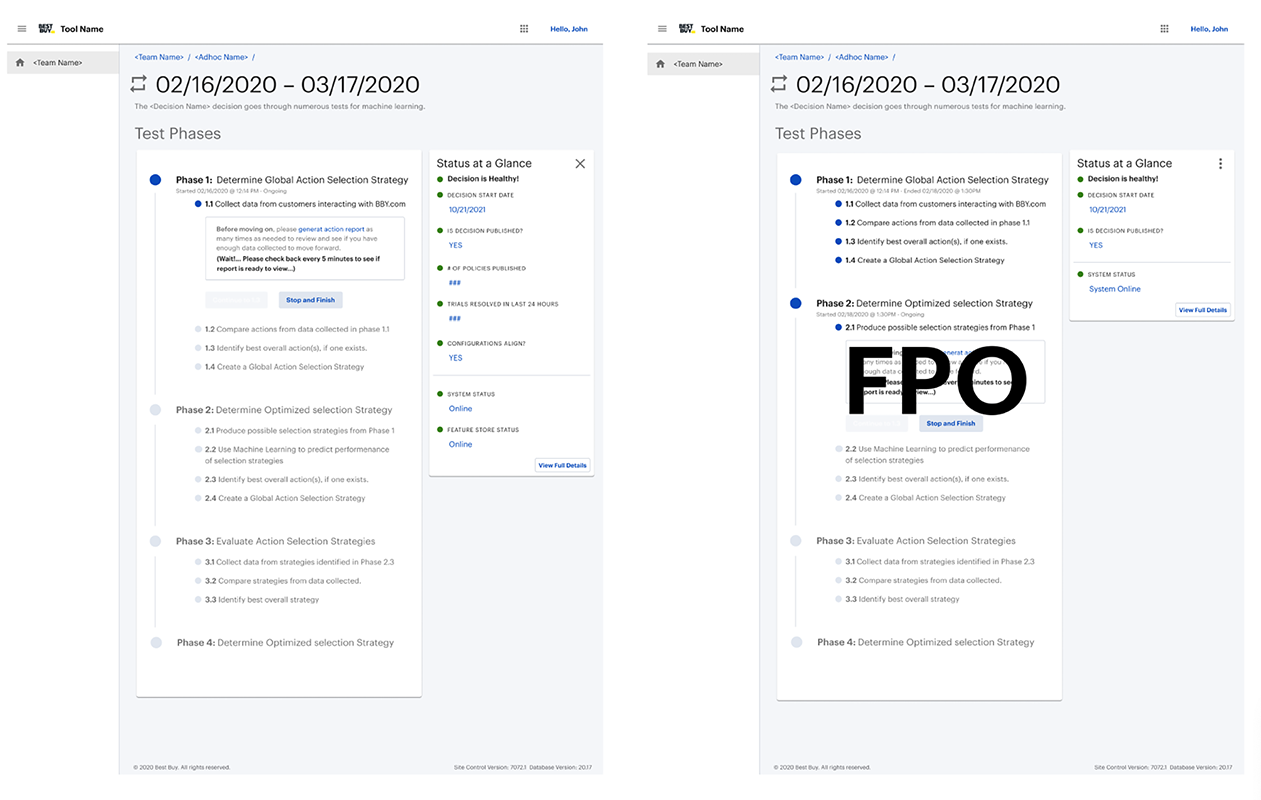

- The 'Status at a Glance' monitoring widget for ML Test overview and phase pages.

- The detailed Decision Health Details Template.

I designed flows for both healthy and data-failure scenarios, wireframing the Decision Health Details page with careful attention to the information hierarchy we had discussed the day before.
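To illustrate the roll-up idea behind a 'Status at a Glance' widget, here is a minimal sketch in Python. It is not the APEX implementation: the `Health` levels, the color mapping, the metric names, and the rule that the worst individual check sets the overall status are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Health(IntEnum):
    # Hypothetical levels, ordered so a larger value is more severe.
    HEALTHY = 0
    WARNING = 1
    FAILING = 2


# Hypothetical color highlighting for each level.
COLORS = {
    Health.HEALTHY: "green",
    Health.WARNING: "yellow",
    Health.FAILING: "red",
}


@dataclass(frozen=True)
class MetricCheck:
    name: str       # illustrative metric name, not a real APEX metric
    health: Health


def status_at_a_glance(checks: list[MetricCheck]) -> tuple[Health, str, list[str]]:
    """Roll individual checks up into one overall status.

    Returns the worst health level found, its display color, and the
    names of every check that is not healthy (in input order).
    An empty list is treated as healthy here; a real monitor might
    instead flag it as unmonitored.
    """
    overall = max((c.health for c in checks), default=Health.HEALTHY)
    needs_attention = [c.name for c in checks if c.health != Health.HEALTHY]
    return overall, COLORS[overall], needs_attention
```

In this sketch, a single failing check turns the whole widget red and names the check to open in the details page, which mirrors the healthy and data-failure flows described above.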

Usability sessions with our Senior Developer and Data Scientist confirmed the monitoring widget's utility and the color highlighting patterns. Users found sufficient information in the detailed monitor to launch their problem-solving workflows. Feedback covered font size and the split utility of the Monitoring Details template page, with engineering focused on data configuration and data science on trial details. That split confirmed my sprint predictions on user segmentation.

On "Define Day," I shared usability results and interaction patterns, focusing on defining requirements and 'Data Health.' Live documentation editing reinforced the importance of 'Source of Truth' documentation, a theme from earlier Service Blueprinting.

We created diagrammatic groupings of metrics based on interface type and priority (e.g., Strategy Specific, Trial Specific), establishing foundational documentation for the APEX Experience and ML Engineering ecosystem.

Outcomes & Learnings

Our engagement concluded with a formal Design Brief. While discussions were fruitful, we continued to refine the data metrics hierarchy and display requirements for ML Test (Decision) data health.

Most importantly, the **At-A-Glance widget successfully addressed the immediate need to monitor crucial Decision metrics** before tests failed. This reduced issue identification and reaction time from weeks to minutes (or a day at most), so data issues could be caught before clients became aware of them, enhancing product reliability.